Kingsley Idehen posted a thoughtful article on URI, URL, and linked data this weekend. Written as a Q&A, the post concisely answers some of the most confusing questions about linked data. It explains the subtle distinction between URI and URL when dealing with linked data. Moreover, the post implies that "a new level of Link Abstraction on the Web" may be needed if we are to consume the linked data Web efficiently.

After leaving a comment on the post, however, I felt the issue deserved a second look. Before moving forward, I have pasted the main section of my original comment on Kingsley's post below.

Another thought I have, however, is that we may have three, rather than two, fundamental definitions for describing the Web. The two well-known ones are data and service; in RDF we define them as Class and Property respectively. Until now, we have treated the third one, link, as nothing but a special form of data. The reality, however, is that this special form is so special that we may consider giving it a little honor, so that it becomes the third member of the fundamental building blocks of the Web. That is, a link is not data, nor is it a service; it is a link. Or, with respect to your post, a URI is not data but a form of link, PERIOD.

I believe that this distinction, once made, could be both important and valuable. The tricky part is that, following this distinction, we can start to think of other forms of links that go beyond the URI (which is just a binary model). We may then start to invent links of higher order, such as the link of links (metalink) or the thread of links (group link). To be honest, if the Web is moving towards a web of linked data (and I believe it is, since Web data is increasingly interconnected), we must break through this traditional thinking about the link model. The key, however, is that from today we start to think of a link as a link, not as data.
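To make the distinction a little more concrete, here is a minimal Python sketch of how the three kinds of links mentioned above might be modeled as data structures. The names `BinaryLink`, `MetaLink`, `GroupLink`, and `order` are hypothetical illustrations, not part of any RDF or linked data specification. The sketch assumes that a URI-style link is a pair of endpoints, that a metalink is a link whose endpoints are themselves links, and that a group link bundles a thread of links into one connection among every thing the thread touches.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple, Union

# A "thing" is identified by a plain string such as a URI (an assumption).
Thing = str


@dataclass(frozen=True)
class BinaryLink:
    """A URI-style link: exactly two endpoints."""
    source: Thing
    target: Thing

    def members(self) -> FrozenSet[Thing]:
        return frozenset({self.source, self.target})


@dataclass(frozen=True)
class MetaLink:
    """Hypothetical link of links: its endpoints are links, not things."""
    source: "Link"
    target: "Link"

    def members(self) -> FrozenSet[Thing]:
        return self.source.members() | self.target.members()


@dataclass(frozen=True)
class GroupLink:
    """Hypothetical thread of links treated as one connection."""
    thread: Tuple["Link", ...]

    def members(self) -> FrozenSet[Thing]:
        result: FrozenSet[Thing] = frozenset()
        for link in self.thread:
            result |= link.members()
        return result


Link = Union[BinaryLink, MetaLink, GroupLink]


def order(link: Link) -> int:
    """Number of distinct things a link connects; 2 for a plain binary link."""
    return len(link.members())


a = BinaryLink("ex:Mary", "ex:John")
b = BinaryLink("ex:John", "ex:Course101")
c = BinaryLink("ex:Course101", "ex:School")
print(order(a))                   # 2
print(order(MetaLink(a, b)))      # 3
print(order(GroupLink((a, b, c))))  # 4
```

The point of the sketch is only that, once a link is its own type rather than a row of data, higher-order links fall out naturally as new link types instead of as extra data about pairs.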

World Wide Web: from the dualistic view to the ternaristic view

The thought that the Web link is a fundamental element of the Web, independent of data and service, originated when I wrote a model of Web evolution. (Actually, it can be traced back to January 2007, when I first started to think about how the Web evolves.) Observing the evolution of the Web, I increasingly felt that data, service, and Web link are three equally fundamental elements of the Web. This interpretation differs from the classic dualistic view, in which the Web is said to be built upon two fundamental first-class entities: data (which expresses static description) and service (which expresses dynamic action). In this classic dualistic model, the Web link is a special second-class member that is partially a static description and partially implied by dynamic action.

RDF is a typical design following this dualistic philosophy of the Web. In RDF, a relation (rdf:Property) is a first-class entity alongside the normal object entity (rdfs:Class). While a class expresses the static facts of the Web, a relation expresses how those static facts interact with each other. Both elements are equally fundamental. Two models with identical classes are not necessarily equivalent, since the properties among the classes may differ.
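The last point can be checked with a small sketch that uses plain Python tuples as triples rather than an RDF library. The `ex:` names are made up for illustration; only `rdf:type`, `rdfs:Class`, and `rdf:Property` are real RDF/RDFS vocabulary. Because the toy graphs contain no blank nodes, plain set equality stands in for RDF graph isomorphism here.

```python
# Each triple is (subject, predicate, object); prefixed names are plain strings.
RDF_TYPE = "rdf:type"

shared = {
    ("ex:Person", RDF_TYPE, "rdfs:Class"),
    ("ex:Mary", RDF_TYPE, "ex:Person"),
    ("ex:John", RDF_TYPE, "ex:Person"),
}

# Same classes, different relations between the same objects.
model_a = shared | {
    ("ex:teacherOf", RDF_TYPE, "rdf:Property"),
    ("ex:Mary", "ex:teacherOf", "ex:John"),
}
model_b = shared | {
    ("ex:friendOf", RDF_TYPE, "rdf:Property"),
    ("ex:Mary", "ex:friendOf", "ex:John"),
}


def classes(model):
    """Subjects declared as rdfs:Class in the model."""
    return {s for (s, p, o) in model if p == RDF_TYPE and o == "rdfs:Class"}


def properties(model):
    """Subjects declared as rdf:Property in the model."""
    return {s for (s, p, o) in model if p == RDF_TYPE and o == "rdf:Property"}


print(classes(model_a) == classes(model_b))        # True: identical classes
print(properties(model_a) == properties(model_b))  # False: different properties
print(model_a == model_b)                          # False: not the same model
```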

Now we need to discuss a few subtle implications of this philosophical view of the Web.

By the dualistic philosophy, a relation is first of all a service and only secondarily a link. For example, suppose there are two statements: (1) Mary is a teacher of John, and (2) Mary is a friend of John. Mary and John are two objects; "teacher-of" and "friend-of" are two relations. Philosophically, however, the primary meaning of each relation is a typical service defined between the two objects. In the first statement, Mary provides a teaching service for John, by which the teacher-of relation is established. In the second, Mary provides a friendship service for John, by which the friend-of relation is established. Note that each of the links is a consequence of the respective service (and there could be other consequences as well), not a prerequisite of the service. The dualistic philosophy holds that service implies link and that every link must be a consequence of a service. Moreover, no link actually makes sense if no service implies it. Every link has a reason, which is, conceptually, a known service (meaning that the service need not actually be implemented).

There is also another side to the Web link according to this dualistic philosophy. Once a link is implied by a service, it becomes data. Unlike a service, which always leads to an action or a production, a link describes a static fact, which by the dualistic philosophy is data.

Therefore a link, which inherits features from both first-class entities, is a special secondary entity in the dualistic view of the Web.
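Read as code, the dualistic account above says that links are produced by services and then persisted as data, each with a reason attached. The sketch below is one hypothetical way to express that: `teach` and `befriend` stand in for the (possibly unimplemented) services, `run_service` stores the implied link as data, and `reasons` records which service implied each link. None of these names is an existing API.

```python
from typing import Callable, Dict, Set, Tuple

Triple = Tuple[str, str, str]


# Hypothetical services: each (conceptually) performs an action between two
# objects and returns the link that the action implies.
def teach(teacher: str, student: str) -> Triple:
    return (teacher, "teacher-of", student)


def befriend(a: str, b: str) -> Triple:
    return (a, "friend-of", b)


# Dualistic view: a link exists only as the consequence of a service,
# and once implied it is stored as static data.
data_store: Set[Triple] = set()
reasons: Dict[Triple, str] = {}


def run_service(service: Callable[[str, str], Triple], x: str, y: str) -> Triple:
    link = service(x, y)
    data_store.add(link)               # the link becomes data
    reasons[link] = service.__name__   # every link keeps the service that implied it
    return link


run_service(teach, "Mary", "John")
run_service(befriend, "Mary", "John")
print(sorted(data_store))
# [('Mary', 'friend-of', 'John'), ('Mary', 'teacher-of', 'John')]
print(reasons[("Mary", "teacher-of", "John")])  # teach
```

In this model there is no way to create a link except by running a service, which is exactly the second-class status the dualistic view gives to links.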

The ternarism philosophy (three fundamental elements to model the world), which I prefer, may describe the same Web but paint a different picture. By this philosophy, a link is neither the consequence of a service nor a unique type of data. A link is a link, which in the ternaristic view of the Web exists without needing to be implied by a service or stored in the form of data.

In the dualistic world, wherever there is a link, there must exist data that represents the link and a service (implemented or not) that implies the link.

In the ternaristic world, however, when there is a link, there may or may not exist data that represents the link, and there may or may not exist a service (let alone an implemented one) that implies the link. A link is nothing but a pure connection among things (possibly more than two of them, hence "among" rather than "between").

In the dualistic world, one thing is always linked to another for some reason. In the ternaristic world, however, a link is a natural connection that does not require a reason to exist. A link is as fundamental as data or a service.

As we know, the Web is a world of information. Following this ternaristic philosophy, the Web we understand becomes a different world from the one we normally see through the dualistic view. It tells us that in the world of information, data reveals the encapsulation of information, service reveals the action and production of information, and link reveals the transportation (as opposed to mere connection) of information. Under this view, any Thing in the information Web is composed of three fundamental elements: data, service, and link. The data element contains the information, the service element enables the production of the information as well as its interaction with other things, and the link element determines whether or not the information can be passed to another Thing.

By the ternaristic view of the Web, when we say there is a link from Thing A to Thing B, we mean that the information carried by Thing A can be transported directly to Thing B without the help of any other information carrier.
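One hypothetical way to encode this ternaristic decomposition: each `Thing` carries three separate fields, and its links form a first-class field of their own rather than triples derived from a service or stored among the data. The sketch assumes, for illustration only, that information moves from A to B directly if and only if A holds a link to B; the field and method names are made up.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Set

Info = Dict[str, str]


@dataclass
class Thing:
    name: str
    data: Info = field(default_factory=dict)               # encapsulates information
    service: Optional[Callable[[Info], Info]] = None        # produces information
    links: Set[str] = field(default_factory=set)            # things reachable directly

    def produce(self) -> None:
        """Let the service element produce new information into the data element."""
        if self.service is not None:
            self.data.update(self.service(self.data))

    def transport_to(self, other: "Thing") -> bool:
        """Pass information to `other` only if a direct link exists (an assumption)."""
        if other.name not in self.links:
            return False
        other.data.update(self.data)
        return True


a = Thing("A", data={"msg": "hello"}, links={"B"},
          service=lambda d: {"len": str(len(d.get("msg", "")))})
b = Thing("B")
c = Thing("C")
a.produce()
print(a.transport_to(b), b.data)  # True {'msg': 'hello', 'len': '5'}
print(a.transport_to(c), c.data)  # False {}
```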

By the ternaristic view of the Web, when there is no link between Thing A and Thing B, it means that unless additional information carriers participate in the transaction, the information carried by A cannot be passed to B. Once the additional information carriers properly join the protocol (possibly on both sides), a higher-order link can be established between A and B.

By the ternaristic view of the Web, there is always a link (i.e., a direct link in the classic sense) between any two things, though the link is often of higher order; that is, it is often not a binary link involving only the two designated things.

Regular Thinking Space readers may have noticed that this ternaristic view of the Web is also influenced by quantum theory. In the dualistic presentation of the Web, we often need to perform an expensive computation to discover a link (a chain of several direct binary links) between two objects. In the ternaristic presentation, by contrast, any two objects are directly linked, though possibly in varied orders. Moreover, I realize that we may be able to apply many classic quantum theories directly to the Web if we start to think of it in the ternaristic view, which I will share in later posts.

Does the ternaristic view actually reveal a more intrinsic fact about the Web? I do not know. But there is one thing I feel certain about.

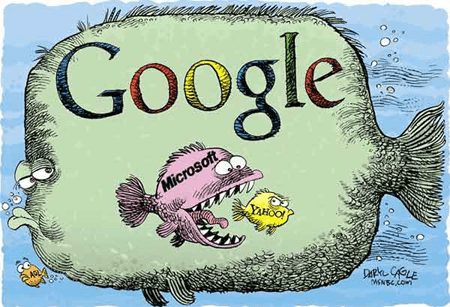

Link is not a simple issue. By better understanding the nature of links in the information world, we may eventually unleash tremendous computational power that we cannot even imagine now. For companies that aim to monetize linked data (such as Kingsley's OpenLink Software), it would be even more valuable to rethink the nature of the links they work with every day.